NeRF - Neural Radiance Fields (review)

Ben Mildenhall

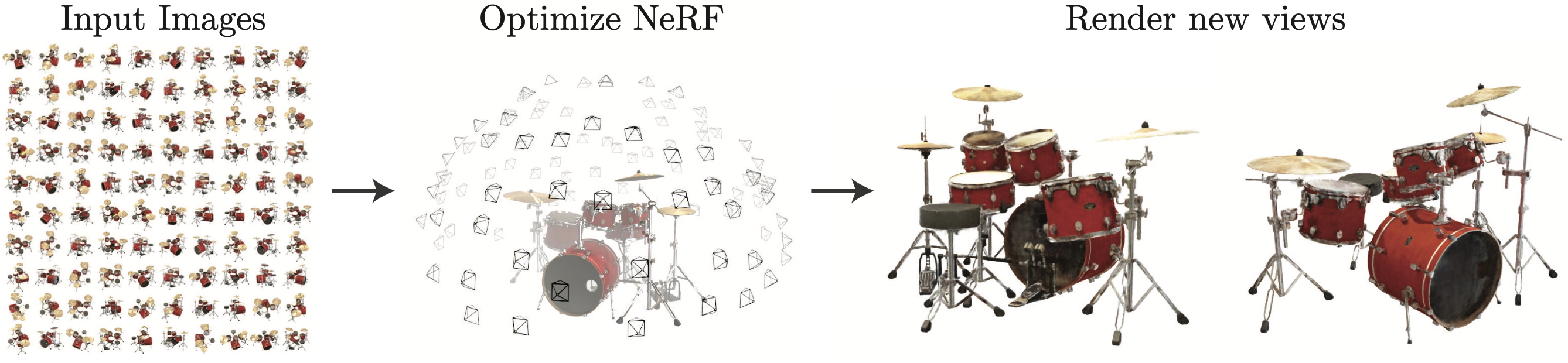

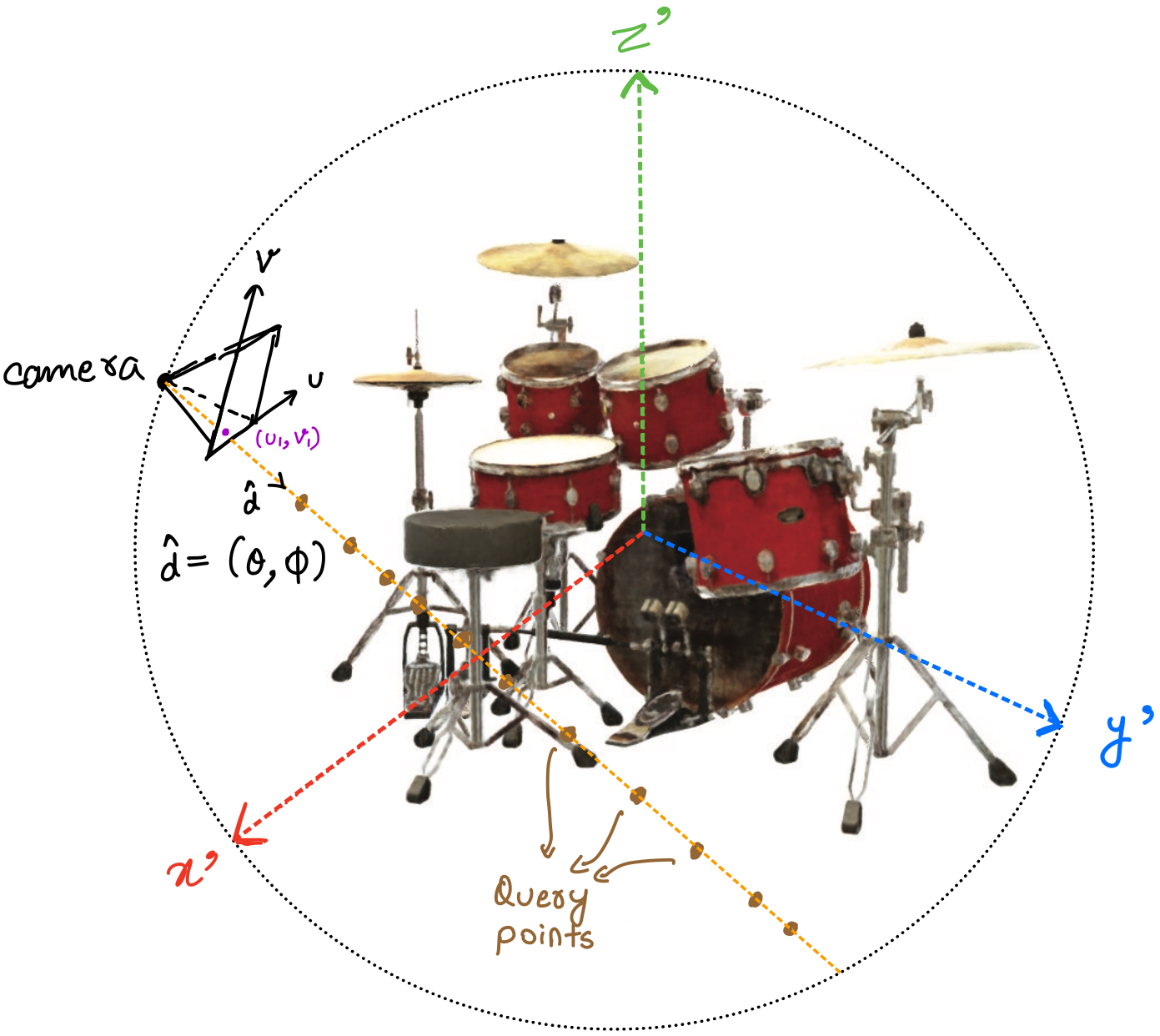

We wish to obtain views from new viewpoints of a scene from a set of 2D RGB images (about 20 to 100 images). Figure 1 gives an excellent visual explanation of the task.

Just to be absolutely clear, I will list the training data and the output of the task separately:

Training data: A set of 2D RGB images of the scene, each paired with the viewpoint it was taken from

Output: For any new viewpoint not in the training data, we can obtain the image of the scene as seen from that viewpoint.

Show-n-tell

Nothing better than seeing some awesome results to motivate this blog! Please see the videos in full-screen mode for a better experience.

What do we mean by viewpoint

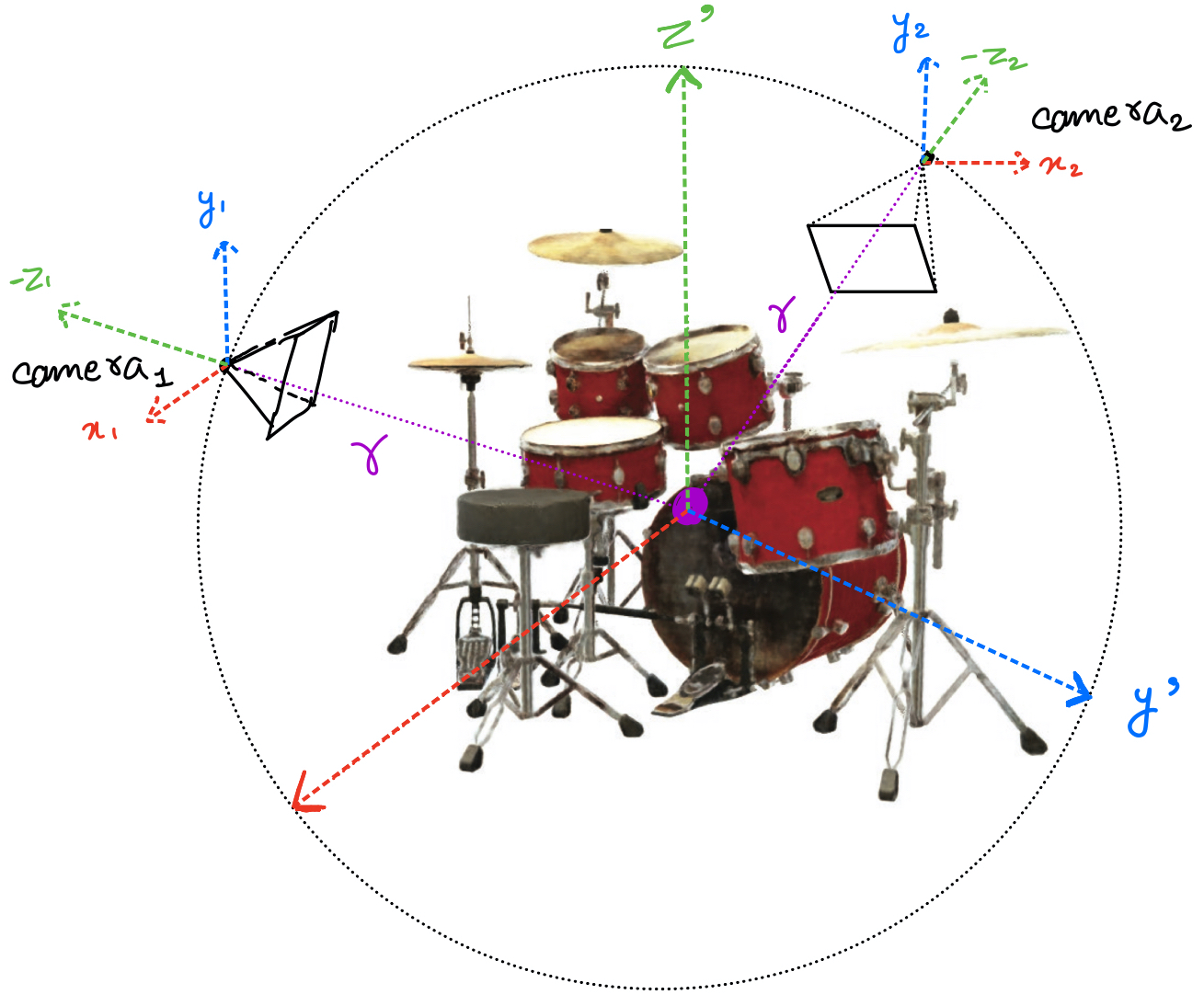

A viewpoint means the position of the camera with respect to the scene. Figure 2 shows two different viewpoints as camera

To completely define the viewpoint, we only need to define the angle of the line joining the camera to the centre (since the distance of the camera from the centre is always

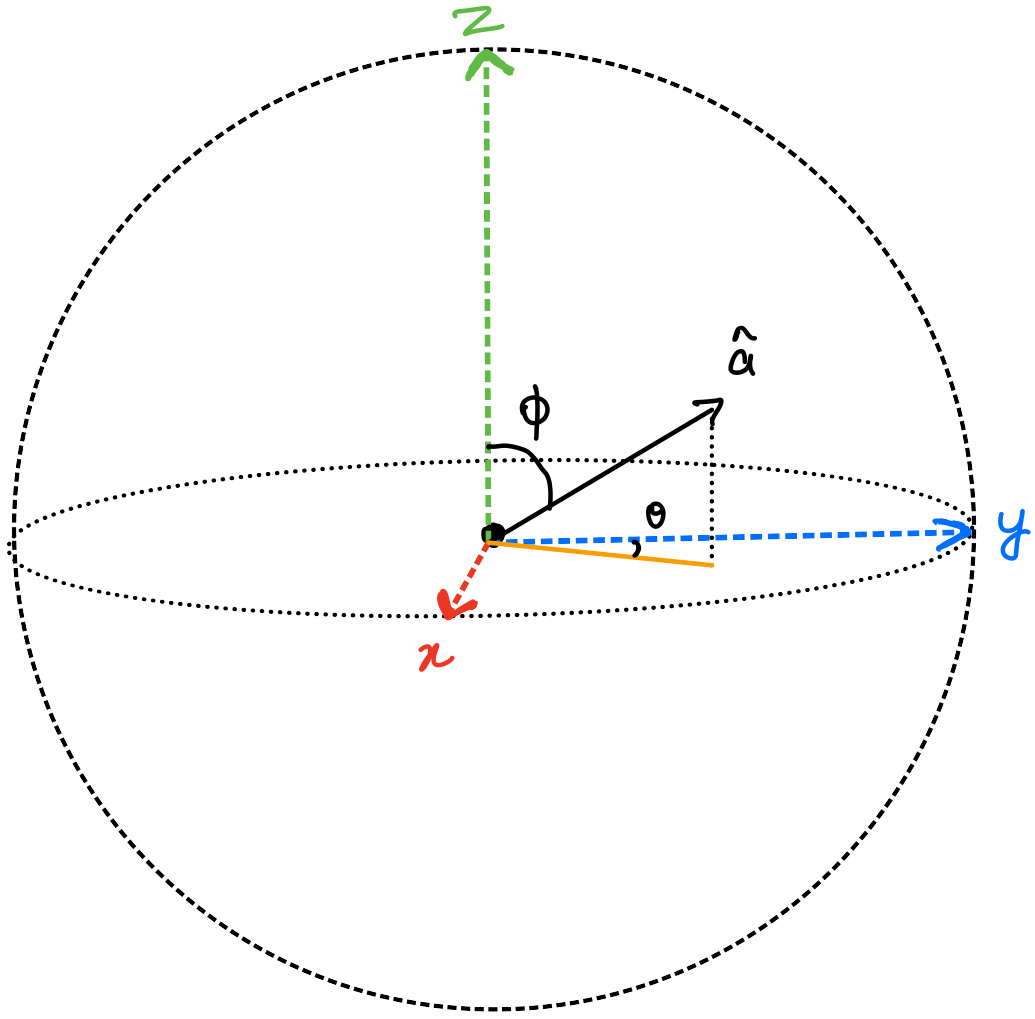

To define the angle of any direction

In summary, a direction vector can be completely defined using a

In total, we will have as many
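To make the idea of describing a direction with angles concrete, here is a minimal sketch that turns two angles into a unit direction vector. It assumes the common spherical convention where `theta` is measured from the z-axis and `phi` is the rotation in the x-y plane; the figure above may use a different convention, and the function name `direction_from_angles` is only illustrative.

```python
import math

def direction_from_angles(theta, phi):
    """Unit direction vector for polar angle theta and azimuth phi (radians)."""
    x = math.sin(theta) * math.cos(phi)
    y = math.sin(theta) * math.sin(phi)
    z = math.cos(theta)
    return (x, y, z)

# A direction lying in the x-y plane, pointing along the x-axis.
d = direction_from_angles(math.pi / 2, 0.0)
print(d)
```

Whatever the angles are, the resulting vector has length 1, which is why two numbers are enough to pin down a direction.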

Highest level overview

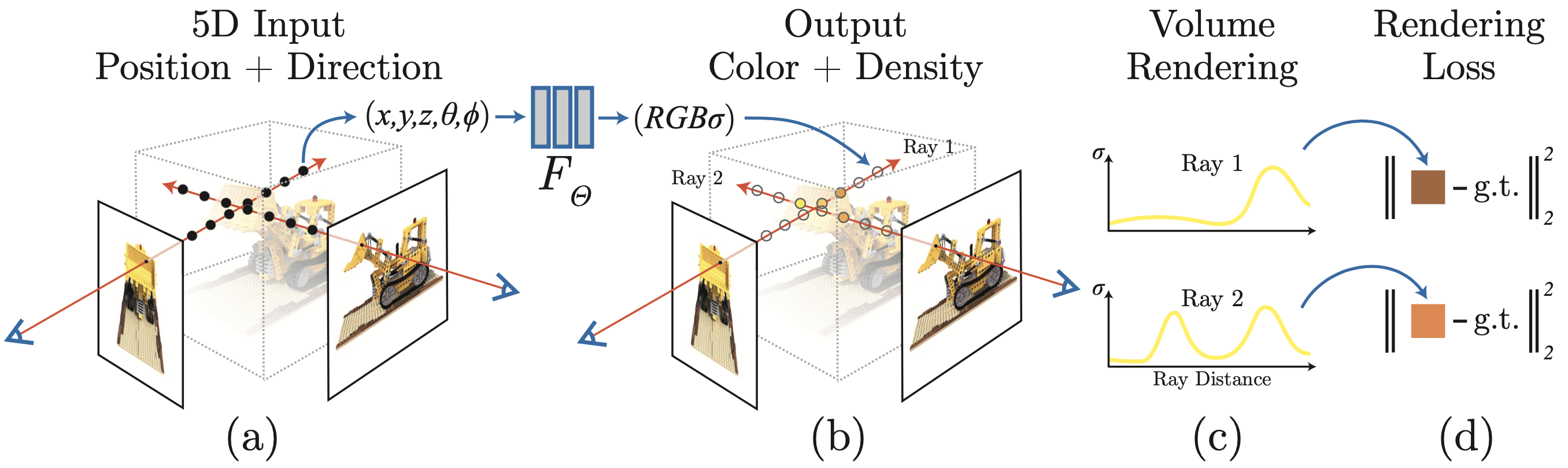

Now that we are clear about the inputs and what we want to achieve, we can start diving into the ‘how’. Figure 4 shows a high-level overview of the method.

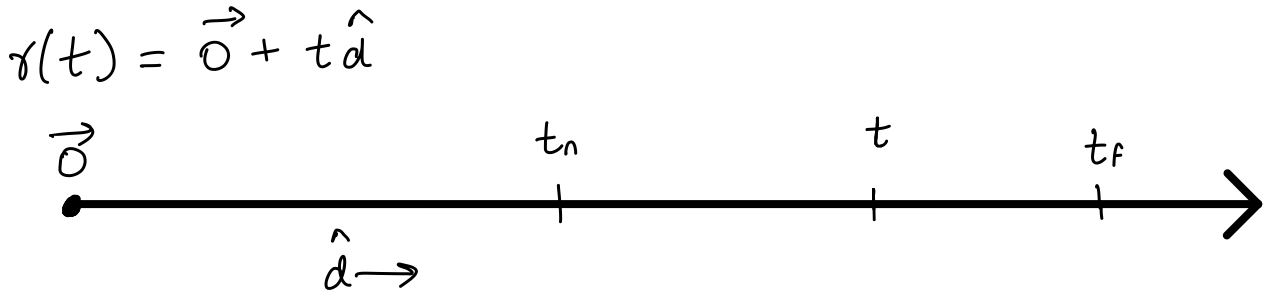

Let’s see a step-by-step breakdown of how to interpret figure 4. Please refer to figure 5 while reading the description below.

For each pixel

- Imagine a ray of light in the

- This ray will hit several points of the scene. The colour that we finally observe at

- We use an equation to combine the colours of all the points along the ray according to their opacity.

- We compare the calculated value of the colour of the pixel
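The points that a ray passes through can be written as r(t) = o + t·d, where o is the camera position and d is the direction through the pixel (this is the parameterisation used in the NeRF paper [1]). Here is a tiny sketch of that idea; the function name and the example numbers are my own and purely illustrative.

```python
def point_on_ray(origin, direction, t):
    """Return the 3D point at distance t along the ray origin + t * direction."""
    return tuple(o + t * d for o, d in zip(origin, direction))

# A camera at the origin looking down the negative z-axis.
origin = (0.0, 0.0, 0.0)
direction = (0.0, 0.0, -1.0)

# Three points that this ray passes through.
points = [point_on_ray(origin, direction, t) for t in (1.0, 2.0, 3.0)]
print(points)
```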

Now that we have the basic overview of how NeRF works, let’s dive into a bit more detail!

Adding details

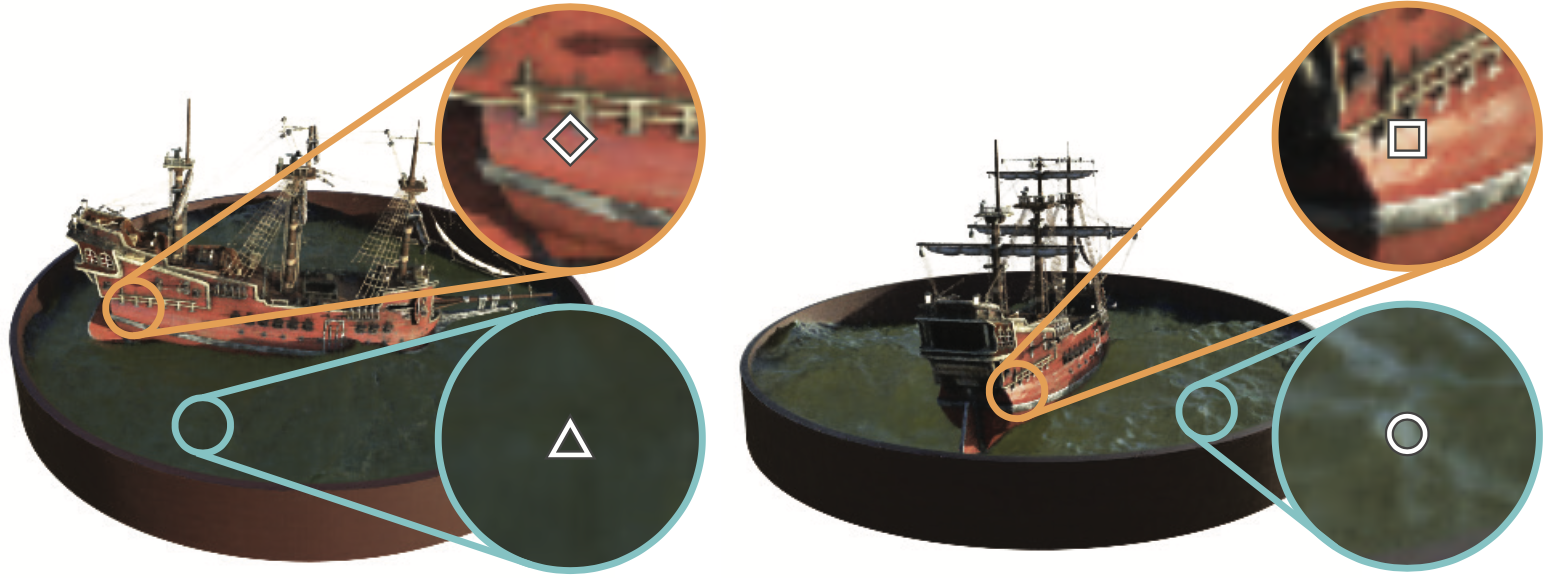

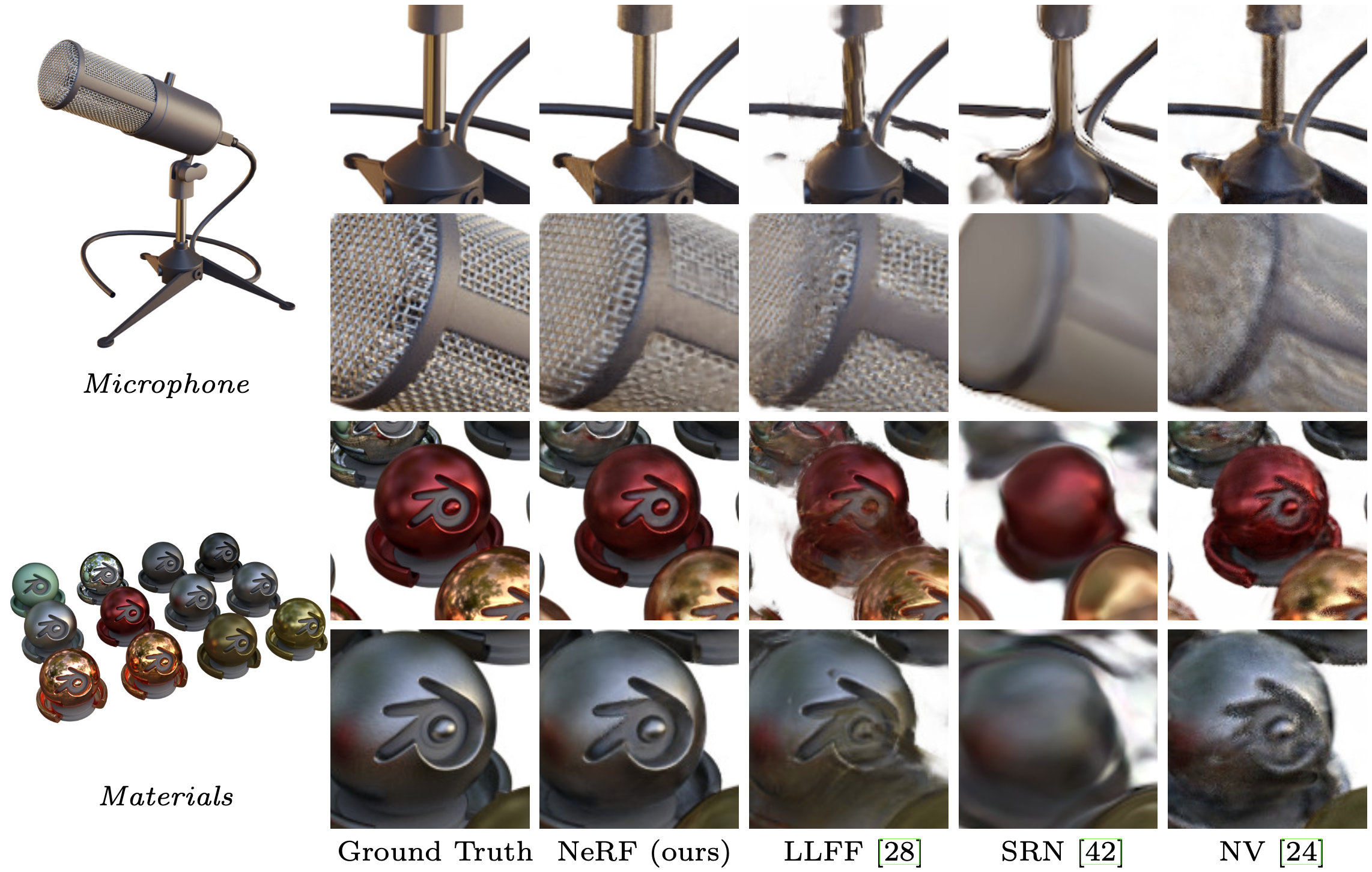

- Intuitively, the colour of any point in the scene should depend on where the camera is (shadows, reflections etc.). Therefore, the colour is not only a function of the coordinates of the point but also of the viewpoint.

Figure 6 - How the colour of points changes with viewpoint.

- The amount of light that any point reflects, refracts and transmits is an inherent property of the point and should be independent of the viewpoint. Thus the density depends only on the coordinates of the point.

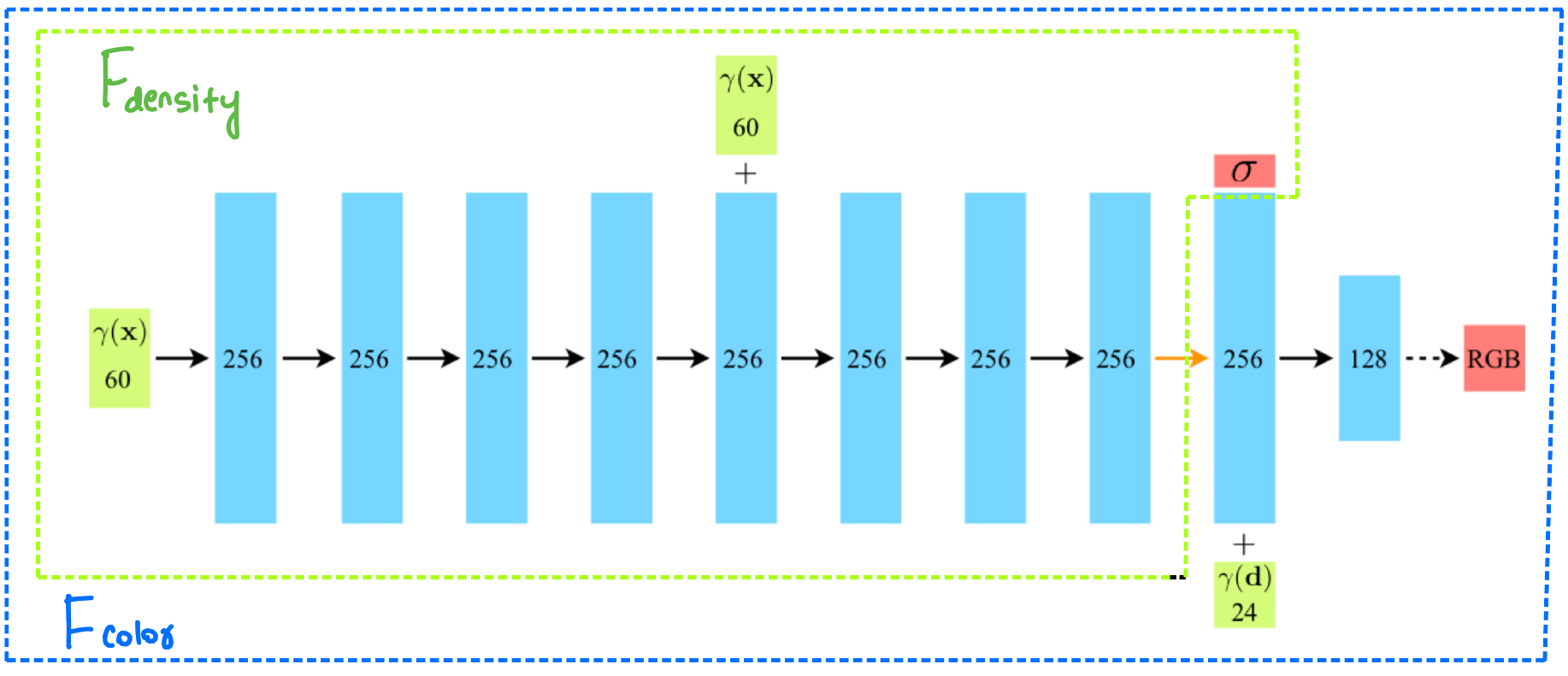

How to get colour at a point?

As touched upon in points 2 and 3 of the high-level overview, to get the predicted value of any pixel, we need two things -

- The colour of a point (RGB value)

- How much light it allows to pass through it (depends on the density)

And as we discussed in the previous section, the colour is a function of both coordinates and viewpoint

We will now use a fully-connected neural network to approximate the functions

The notation used in the figure is slightly different, with

We can again observe in the network architecture figure that

For now, please ignore the

Combining the colours along a ray

Please recall point 3 of the high-level explanation, corresponding to the volume rendering step in figure 4. We now have to combine the different colours of the points (that the ray hits) according to their densities.

Now, I’m going to write a scary (but intuitive) equation that we will use to get the expected colour of a camera ray
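For reference, this is how the NeRF paper [1] writes the expected colour of a camera ray $\mathbf{r}(t) = \mathbf{o} + t\mathbf{d}$ with near and far bounds $t_n$ and $t_f$. The notation follows the paper and may differ slightly from the symbols used elsewhere in this post:

$$
C(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\,\sigma(\mathbf{r}(t))\,\mathbf{c}(\mathbf{r}(t), \mathbf{d})\,dt,
\qquad
T(t) = \exp\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\,ds\right)
$$

Here $\sigma$ is the volume density, $\mathbf{c}$ is the colour, and $T(t)$ is the fraction of light that makes it from $t_n$ to $t$ without being blocked.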

Please note that a complete understanding of this equation would take us away from the main point of the paper. For the more interested readers, I will be explaining this equation, called the rendering equation, in another blog post.

For now, we only need to have an intuitive understanding of the terms here. The input

Note to keep in mind:

For efficiency reasons, we render the scene from our eyes to the source instead of the other way around. This is called backward tracing, and is almost always used in practice.

So, why do we observe colours? It is because the light from any light source is reflected partially in our direction. Intuitively, the colour that we observe for any object should depend on three factors -

- The intensity with which the reflected light reaches us (depends on the density of objects in between)

- The material properties of the object (density) to reflect light

- The colour of the reflected light in our direction

The function

The volume density

The term

And now, as promised, here’s the blog on the fun physics behind the rendering equation!

Integral as a sum


Let us see how to evaluate the rendering equation. We replace the integral with a sum over some chosen discrete points between

where
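To see the sum in action, here is a minimal sketch of the discrete version given in the NeRF paper [1]: each sample contributes its colour with weight $T_i\,(1 - e^{-\sigma_i \delta_i})$, where $\delta_i$ is the distance to the next sample and $T_i = \exp(-\sum_{j<i} \sigma_j \delta_j)$ is the light that survives up to sample $i$. The function name `render_ray`, the use of `t_far` to give the last sample a width, and the toy numbers are my own assumptions for illustration.

```python
import math

def render_ray(ts, sigmas, colours, t_far):
    """Approximate the colour of one ray from samples along it.

    ts      : increasing sample distances along the ray
    sigmas  : volume density at each sample
    colours : (r, g, b) tuple at each sample
    t_far   : far bound, used to give the last sample a width
    """
    pixel = [0.0, 0.0, 0.0]
    weights = []
    transmittance = 1.0  # fraction of light that survives up to this sample
    for i, (t, sigma, colour) in enumerate(zip(ts, sigmas, colours)):
        t_next = ts[i + 1] if i + 1 < len(ts) else t_far
        delta = t_next - t
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        weights.append(weight)
        for k in range(3):
            pixel[k] += weight * colour[k]
        transmittance *= 1.0 - alpha
    return pixel, weights

# Empty space, then a very dense red point, then a blue point hidden behind it.
pixel, weights = render_ray(
    ts=[1.0, 2.0, 3.0],
    sigmas=[0.0, 1000.0, 1000.0],
    colours=[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)],
    t_far=4.0,
)
print(pixel, weights)
```

In this toy example the red point is so dense that it blocks everything behind it, so the blue point contributes nothing to the pixel. The returned `weights` are also handy later, since they tell us which samples mattered.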

Discrete sampling

The last question that we wish to answer is how to sample points in [

NeRF uses a stratified sampling approach (Wikipedia), which is jargon for dividing the population into bins and sampling uniformly from within each bin. The advantage of stratified sampling is that it ensures that each bin has some representation in the final answer.

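Here is the same thing, but in math. Here N denotes the number of bins, one point is sampled uniformly from each bin, and $t_n$ and $t_f$ denote the near and far ends of the range being sampled (as in the paper [1]):

$$
t_i \sim \mathcal{U}\left[\, t_n + \frac{i-1}{N}(t_f - t_n),\;\; t_n + \frac{i}{N}(t_f - t_n) \right], \qquad i = 1, \dots, N
$$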
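And here is the same thing once more, this time as a small code sketch. The function name `stratified_samples` and its parameters are my own; the optional `rng` argument is only there so that the randomness can be seeded.

```python
import random

def stratified_samples(t_near, t_far, n_bins, rng=random):
    """Draw one uniform sample from each of n_bins equal-width bins."""
    width = (t_far - t_near) / n_bins
    # Bin i covers [t_near + i * width, t_near + (i + 1) * width).
    return [t_near + (i + rng.random()) * width for i in range(n_bins)]

samples = stratified_samples(2.0, 6.0, 4, rng=random.Random(0))
print(samples)
```

Because each bin contributes exactly one point, the samples come out already sorted along the ray, and no part of the range is left unvisited.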

Summary till now

We can now sample points in a range and use the rendering equation to combine the colours of these points. Doing this will give us the predicted colour value of a pixel. We can then apply our
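As a rough sketch of how the predicted pixel colours can be compared against the true ones: the NeRF paper [1] uses the total squared error between the rendered and ground-truth colours. The helper names below are my own and only illustrate the computation; in practice this would be done inside an automatic differentiation framework so that the error can be backpropagated.

```python
def squared_error(predicted, target):
    """Sum of squared differences between two RGB colours."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target))

def batch_loss(predicted_pixels, target_pixels):
    """Total squared error over a batch of rays (one pixel per ray)."""
    return sum(
        squared_error(p, t) for p, t in zip(predicted_pixels, target_pixels)
    )

loss = batch_loss(
    [(1.0, 0.0, 0.0), (0.5, 0.5, 0.5)],  # predicted colours
    [(1.0, 0.0, 0.0), (0.5, 0.5, 1.0)],  # true colours
)
print(loss)
```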

Clever tricks

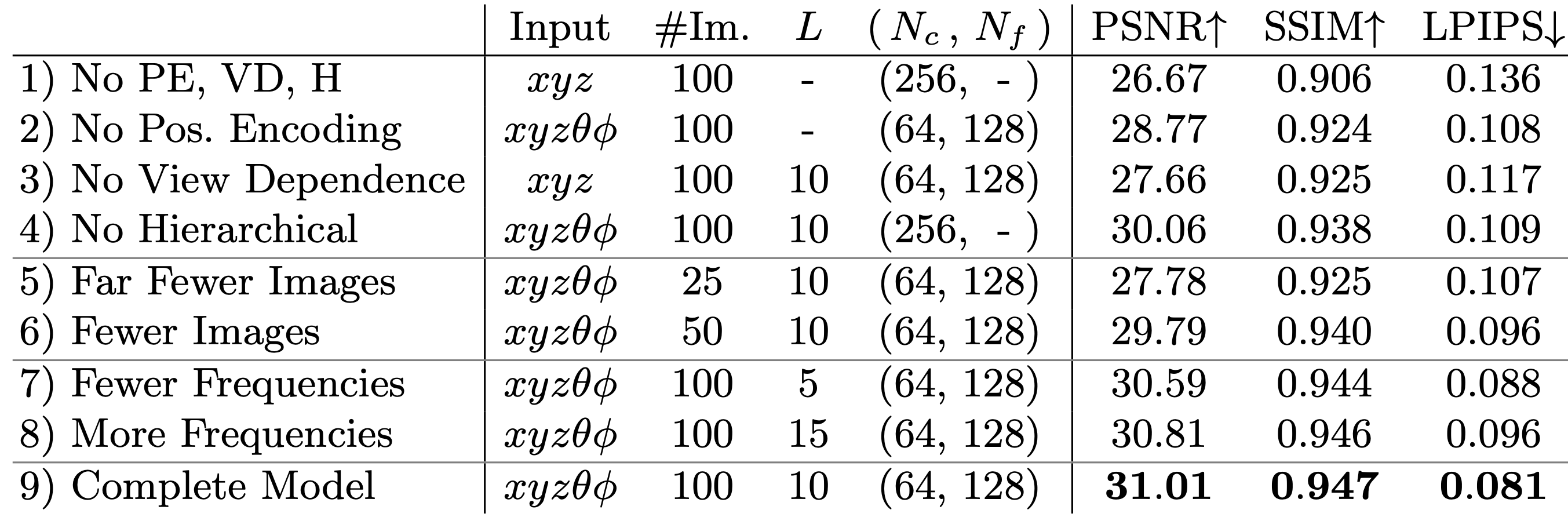

1. Hierarchical sampling

Well, it turns out that with typical large values of the number of points sampled,

NeRF uses a two-step hierarchical approach to sampling that increases rendering efficiency. The motivation for breaking sampling down into a two-step process is that we wish to allocate samples in proportion to their expected effect on the final rendering.

In the first coarse pass, we will sample a small number of points

In the second fine pass, we will use the regions where the good points lie to sample more points

Note that NeRF simultaneously optimizes two networks: one coarse and one fine.
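The fine pass can be sketched as follows. In the paper [1], the weights from the coarse pass are normalised into a piecewise-constant probability distribution along the ray, and the extra points are drawn from it with inverse transform sampling. The code below is a simplified illustration of that idea, not the reference implementation: the function name `sample_fine` and the explicit `bin_edges` argument are my own assumptions.

```python
import bisect
import random

def sample_fine(bin_edges, weights, n_fine, rng=random):
    """Sample n_fine points from the piecewise-constant PDF given by weights.

    bin_edges : len(weights) + 1 increasing distances along the ray
    weights   : non-negative coarse weight for each bin
    """
    total = sum(weights)
    if total <= 0.0:
        raise ValueError("weights must have a positive sum")
    # Cumulative distribution at each bin edge, running from 0 to 1.
    cdf = [0.0]
    for w in weights:
        cdf.append(cdf[-1] + w / total)
    samples = []
    for _ in range(n_fine):
        u = rng.random()
        # Find the bin whose CDF interval contains u.
        i = min(bisect.bisect_right(cdf, u) - 1, len(weights) - 1)
        span = cdf[i + 1] - cdf[i]
        frac = min((u - cdf[i]) / span, 1.0) if span > 0 else 0.0
        left, right = bin_edges[i], bin_edges[i + 1]
        samples.append(left + frac * (right - left))
    return sorted(samples)

# All of the coarse weight sits in the middle bin, so all fine samples land there.
fine = sample_fine([0.0, 1.0, 2.0, 3.0], [0.0, 1.0, 0.0], 5, rng=random.Random(0))
print(fine)
```

Bins with a large coarse weight receive most of the new points, while bins with zero weight (empty space, or points hidden behind something opaque) receive none.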

2. Positional encoding

Let us come to a neat little trick that NeRF uses. The motivation is that the scenes are complex functions. To reconstruct such scenes, the neural network needs to be able to approximate high-frequency functions. Neural networks in their raw form are not so good at doing this [2]. The paper suggests that after mapping the 5D input (

Let’s first see how the positional encoding is used:

For any scalar
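For a concrete picture, the encoding in the paper [1] maps a scalar $p$ to $\big(\sin(2^0 \pi p), \cos(2^0 \pi p), \dots, \sin(2^{L-1} \pi p), \cos(2^{L-1} \pi p)\big)$, applied separately to each input coordinate. Below is a minimal sketch of it; the function name `positional_encoding` and the parameter name `num_freqs` (the $L$ above) are my own.

```python
import math

def positional_encoding(p, num_freqs):
    """Map a scalar p to 2 * num_freqs sine and cosine features."""
    features = []
    for k in range(num_freqs):
        angle = (2.0 ** k) * math.pi * p
        features.append(math.sin(angle))
        features.append(math.cos(angle))
    return features

encoded = positional_encoding(0.5, 4)
print(len(encoded))  # 8 features from a single scalar
```

Each extra frequency doubles how fast the features oscillate, which is what gives the network access to fine detail.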

All this is well and good, but is this really important? Boy, you’re in for a surprise! Let’s compare the results with and without using the positional encoding:

The idea of positional encoding is not new. It is already widely used in NLP, specifically Transformers. However, the drastic improvement here is quite astonishing to me!

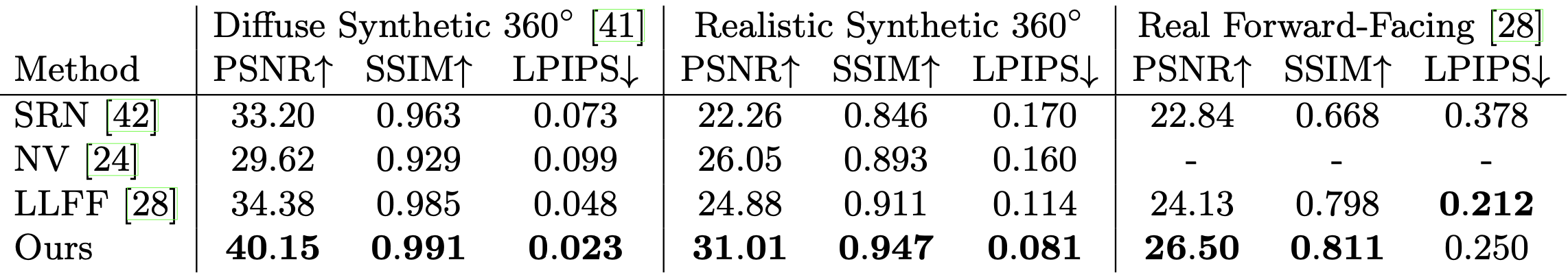

Results and comparisons

Merits

- high-quality state-of-the-art reconstructions

- does a great job with occluded objects and reflections from surfaces

- accurate depth information for any view can be extracted using

- 3D representation size is ~5MB, which is less than the storage required to store the raw images alone!

De-merits

- the network is trained separately for each scene

- training takes at least 10 hours for a single scene

- without the positional encoding, the quality of the reconstructions degrades drastically

Implementations

References

- NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis [Arxiv]

- Fourier Features Let Networks Learn High Frequency Functions in Low Dimensional Domains [Arxiv]

- Two minute papers video!

- Project page

- ECCV 2020 short (4-minute) and long (10-minute) talk

- Blogs on nerfs (1, 2)

- An excellent explanation of the basics of ray tracing

Media sources
